Here is a nice little graphics project: tools for optimizing illusion tattoos like these:

Here is the article that image is from.

I suggest that if you and some friends do this, you all agree to get a tattoo using the tool at paper submission time. I guarantee that is a strong incentive for software quality!

Thursday, November 27, 2014

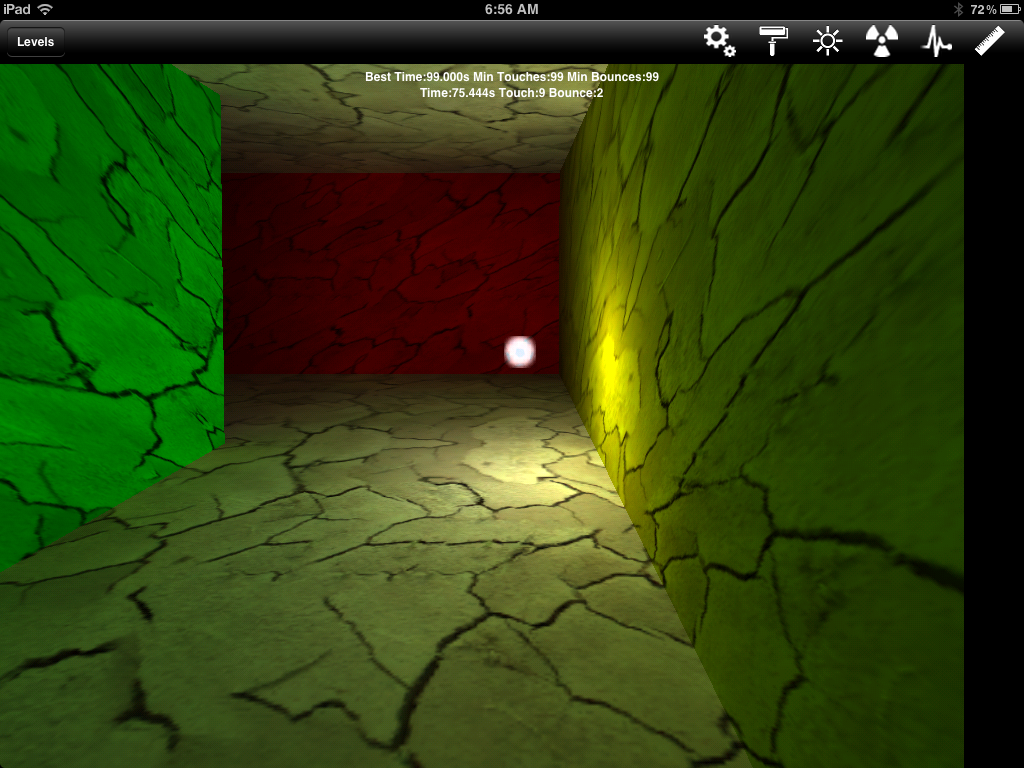

Radiosity on an iPhone

I went to Brad Loos' PhD defense earlier this week (both the defense and the work presented were excellent; congratulate Brad and remind him that "Dr. Loos" sounds like a James Bond villain). One of the things Brad talked about was modular radiance transport (MRT). To my mind this was one of the most important works in rendering because it was a serious attempt to "make radiosity work", and in my opinion it is the most successful one so far. The basic idea is to divide the world into boxes with some number of open interfaces. By some muscular linear algebra and clever representation they make this work very well for environments that are not exactly mazes and have clutter and details and characters. Brad mentioned in his talk that Kenny Mitchell spearheaded an MRT tech demo game that is free on the Apple App Store. This was done at the time of the work and it even worked on my generation 0 iPad, and here's a screenshot:

It is fluid on that old hardware, and REALLY fluid on my newer phone. Interactive radiosity really does look good (and the underlying approximations can be seen if you pay careful attention, but I would not have guessed this wasn't just a full solution except for how fast it was). Try it-- it's fun!

Here is a video of it running.

Also, there is a full report on all the tech behind it.

Wednesday, November 26, 2014

Secondary light source

One of the most useful assumptions in graphics is that there is "direct" lighting and "indirect" lighting. Mirrors always mess that up. Here is an example of a shadow cast by "indirect" light:

The light comes from a car:

For games and historical visualizations it is probably best to just pretend those secondary specular lights are not there. However, for predictive applications they should be tracked. Dumping the whole concept of "direct" light is not irrational in such cases.

Caption: The shadows on the ground are from the Sun, which is well above the horizon. The dimmer shadow in the doorway is from a bright "secondary" light.

Caption: The shadow is cast from the highlight on the red car. Interestingly, the iPhone camera makes all these bright highlights about the same, whereas in real life one is MUCH brighter. Clamping FTW!

Tuesday, November 25, 2014

Photo processing tattoos

Our app extension fixes to SimplePic went live last night (we solved the App Store search bug of Pic! by just changing the name. Crude, but effective. The fix to the app extension was replacing some of the Swift code with Objective-C... old technology has more warts but fewer bugs). I tested it on Tina Ziemek's tattoo as that is one of the things SimplePic is designed for:

The middle picture is a good example of the tradeoff in changing colors without messing with skin tones too much. Some of the hues change a little as part of that tradeoff. There are most definitely a few SIGGRAPH papers to be had in this domain, and I am not going to pursue it, but if anybody does please keep me apprised of what you find (there is a correlation between tattoos and iPhones so there's a specialized app to be had as well). I personally use the posterization when I want to exaggerate contrast (like a photo of a sign where the colors are blah), so I probably wouldn't do that here.

PS-- Many of you know Tina; FYI, she has started a game company and has a crowd-funding campaign going. Sign up!

Caption: Top: original image. Bottom two: output of SimplePic.

Monday, November 24, 2014

Strange looking reflection

This one looks to me like a bug in real life.

(I don't mean insect). It is a reflection of this lamp:

Friday, November 21, 2014

And a mistake in the original ray tracing paper

More fun trivia on the original ray tracing paper. Whitted put in attenuation with distance along the reflection rays:

This is a very understandable mistake. First, that radiance doesn't fall off as distance squared is confusing when you first hit a graphics class, and there were no graphics classes with ideal specular reflection then; Whitted was inventing it! Second, the picture with the bug I think probably looks better than without: the fading checkerboard in the specular reflection looks kind of like a brushed metal effect. So it's a good hack!
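Here is a minimal numerical sketch of why the distance attenuation is not needed. It assumes a pixel that subtends a fixed solid angle and looks straight at a large, uniformly bright surface (small angles, normal incidence). The function and parameter names are illustrative only and do not come from Whitted's paper.

```python
def power_through_pixel(radiance, distance, pixel_solid_angle, aperture_area):
    """Power collected by one pixel looking at a uniform surface.

    Assumes small angles and normal incidence (illustrative setup only).
    """
    # The patch of surface seen by the pixel grows as distance squared.
    visible_area = pixel_solid_angle * distance ** 2
    # The aperture, seen from the surface, shrinks as 1 / distance squared.
    aperture_solid_angle = aperture_area / distance ** 2
    # Power = radiance * emitting area * solid angle of the receiver.
    return radiance * visible_area * aperture_solid_angle


if __name__ == "__main__":
    for d in (1.0, 10.0, 100.0):
        print(d, power_through_pixel(5.0, d, 1e-6, 1e-4))
```

The two distance-squared factors cancel, so the pixel records the same value at every distance. Each point on the surface does deliver less light to the aperture as it recedes, but the pixel sees proportionally more surface.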

There are two lessons to draw from this. First, when bugs look good, think about how to make them into a technique. Second, when you make a mistake in print, don't worry about it: if somebody is pointing it out decades later you probably wrote one of the best papers of all time. And if the paper is not great, it will disappear and nobody will notice the mistake!

Caption: Whitted's famous ray tracing image, from Wikipedia.

Thursday, November 20, 2014

Another fun tidbit from the original ray tracing paper

The paper also used bounding volume hierarchies (built by hand). It's amazing how well this paper stands up after so long.

Cloud ray tracing in 2050

My recent reread of Turner Whitted's paper from 35 years ago made me think about what kind of ray tracing one can do 35 years from now. (I am likely to barely see that).

First, how many rays can I send now? Of the fast ray tracers out there I am most familiar with the OptiX one from NVIDIA running on their hardware. Ideally you go for their VCA Titan-based setup. My read of their speed numbers is that for "hard" ray sets (incoherent path sets) on real models, a single VCA (8 Titans) can deliver over one billion rays per second (!).

Now, how many pixels do I have? The new computer I covet has about 15 million pixels. So the VCA machine and OptiX ought to give me around 100 rays per pixel per second even on that crazy hi-res screen. So at 30fps I should be able to get ray tracing with shadows and specular inter-reflections (so Whitted-style ray tracing) at one sample per pixel. And at that pixel density I bet it looks pretty good. (OptiX guys, I want to see that!)

How would it be for path-traced previews with diffuse inter-reflection? 100 rays per pixel per second probably translates to around 10 samples (viewing rays) per pixel per second. That is probably pretty good for design previews, so I expect this to have impact in design now, but it's marginal which is why you might buy a server and not a laptop to design cars etc.

In 35 years what is the power we should have available? Extending Moore's law naively is dangerous due to all the quantum limits we hear about, but with Monte Carlo ray tracing there is no reason that for the design preview scenario where progressive rendering is used you couldn't use as many computers as you could afford and where network traffic wouldn't kill you.

The overall Moore's law of performance (yes, that is not what the real Moore's Law is, so we're being informal) is that historically the performance of a single chip has doubled every 18 months or so. The number of pixels has only gone up about a factor of 20 in the last 35 years and there are limits of human resolution there, but let's say they go up by a factor of 10. If the performance Moore's law continues due to changes in computers, or more likely lots of processors on the cloud for things like Monte Carlo ray tracing, then we'll see about 24 doublings, which is a factor of about 16 million. To check that, Whitted did about a quarter million pixels per hour, so let's call that a million rays an hour, which is about 300 rays per second. Our 16 million figure would predict about 4.8 giga rays per second today, which for Whitted's scenes I imagine people can easily get now. So what does that give per pixel in 2050? Assuming 150 million pixels, we should have ray tracing (on the cloud? I think yes) of 10-20 million paths per second per pixel.
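The arithmetic above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch using the round numbers from the text. The figure of 10 rays per path is an assumption carried over from the "100 rays is about 10 samples" estimate earlier, and the doubling count is rounded up to match the "about 24" in the text.

```python
import math

RAYS_PER_SECOND_2014 = 1.0e9      # one VCA, "over one billion rays per second"
PIXELS_2014 = 15.0e6              # the coveted hi-res screen
PIXELS_2050 = 150.0e6             # assumed factor of 10 growth in pixels
YEARS_AHEAD = 35.0
DOUBLING_PERIOD_YEARS = 1.5       # informal "performance Moore's law"
RAYS_PER_PATH = 10.0              # assumption: about 10 rays per path sample
WHITTED_RAYS_PER_SECOND = 300.0   # about a million rays per hour


def speedup(years, doubling_period):
    """Return (number of doublings, growth factor), rounding doublings up."""
    doublings = math.ceil(years / doubling_period)
    return doublings, 2.0 ** doublings


doublings, factor = speedup(YEARS_AHEAD, DOUBLING_PERIOD_YEARS)
rays_per_pixel_2014 = RAYS_PER_SECOND_2014 / PIXELS_2014
whitted_check = WHITTED_RAYS_PER_SECOND * factor
rays_per_pixel_2050 = RAYS_PER_SECOND_2014 * factor / PIXELS_2050
paths_per_pixel_2050 = rays_per_pixel_2050 / RAYS_PER_PATH

print("doublings:", doublings, "factor:", factor)
print("2014 rays per pixel per second:", rays_per_pixel_2014)
print("Whitted rate scaled to today:", whitted_check)
print("2050 paths per pixel per second:", paths_per_pixel_2050)
```

With exactly one billion rays per second the 2014 figure comes out nearer 67 than 100 rays per pixel per second, which is the same order of magnitude as the rounded number in the text. The 2050 estimate lands inside the 10-20 million range.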

What does this all mean? I think it means unstratified Monte Carlo (path tracing, Metropolis, bidirectional, whatever) will be increasingly attractive. That is in fact true for any Monte Carlo algorithm in graphics or non-graphics markets. Server vendors: make good tools for distributed random number generation and I bet you will increase the server market! Researchers: increase your ray budget and see what algorithms that suggests. Government funding agencies: move some money from hardware purchase programs to server fee grants (maybe that is happening: I am not in the academic grant ecosystem at present).

Wednesday, November 19, 2014

Path tracing preview idea

In reviewing papers from the 1980s I thought again of my first paper submission in 87 or so. It was a bad idea and rightly got nixed. But I think it is now a good idea. Anyone who wants to pursue it please go for it and put me in the acknowledgements: I am working on 2D the next couple of years!

A Monte Carlo ray tracer samples a high dimensional space. As Cook pointed out in his classic paper, it makes practical sense to divide the space into 1D and 2D subspaces and parametrize them to [0,1] for conceptual simplicity. For example:

1. camera lens: (u1, u2)

2. specular reflection direction: (u3, u4)

3. diffuse reflection direction (could be combined with the specular direction, #2, but in practice don't for glossy layered): (u5, u6)

4. light sampling location/direction: (u7, u8)

5. pixel area: (u9, u10)

6. time (motion blur): u11

All sorts of fun and productive games are played to give the sample sets good multidimensional properties across those dimensions, with QMC taking a particular approach to that, and Cook suggesting magic squares. The approach in that bad paper was to pretend there is no correlation at all between the pairs of dimensions. So use:

1. (u1, u2)

2. (u1, u2)

3. (u1, u2)

4. (u1, u2)

5. (u1, u2)

6. u1

The samples can be regular or jittered-- just subdivide when the 4-connected or 8-connected neighbors don't match. Start with 100 or so samples per pixel.
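The shared-sample idea can be sketched as follows. This is a minimal illustration, not the code from the original submission: the dimension names and the dictionary layout are made up for the example, and the neighbor-based subdivision step is left out.

```python
import random

# Illustrative names for the five 2D dimensions listed above.
DIMENSIONS_2D = (
    "camera lens",
    "specular direction",
    "diffuse direction",
    "light sample",
    "pixel area",
)


def grid_samples(n, rng, jitter=True):
    """Return n*n points in [0,1)^2, one per grid cell, regular or jittered."""
    points = []
    for i in range(n):
        for j in range(n):
            du = rng.random() if jitter else 0.5
            dv = rng.random() if jitter else 0.5
            points.append(((i + du) / n, (j + dv) / n))
    return points


def shared_sample_vectors(n, rng, jitter=True):
    """Reuse the same (u1, u2) for every 2D dimension, and u1 for time."""
    vectors = []
    for u1, u2 in grid_samples(n, rng, jitter):
        vector = {name: (u1, u2) for name in DIMENSIONS_2D}
        vector["time"] = u1
        vectors.append(vector)
    return vectors


if __name__ == "__main__":
    samples = shared_sample_vectors(10, random.Random(1))  # 100 per pixel
    print(len(samples), samples[0])
```

Because every dimension is driven by one 2D grid, the image becomes a function on that grid, which is what makes comparing 4-connected or 8-connected neighbors meaningful.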

Yes this approach has artifacts, but often now Monte Carlo is used for a noisy preview and the designer hits "go" for a noisy version when the setup is right. The approach above will instead give artifacts but the key question for preview (a great area to research now) is how to get the best image for the designer as the image progresses.

Note this will be best for one bounce of diffuse only but that is often what one wants, and it is another artifact to replace multi-bounce with an ambient. The question for preview is how best to trade off artifacts for time. Doing it unbiased just makes the artifact noise.

Let me know if anybody tries it. If you have a Monte Carlo ray tracer it's a pretty quick project.

Tuesday, November 18, 2014

Choosing research topics

A graduate student recently asked me for some advice on research and I decided to put some of the advice down here. One of the hardest things to do in research is figure out what to work on. I reserve other topics like how to manage collaborations for another time.

What are your career goals?

To decide what to work on, first decide what goal any given piece of work leads to. I roughly divide career paths for researchers into these:

- Academic researcher

- Academic teacher

- Industrial researcher

- Industrial advanced development

- Start-up company

What are you good at?

This should be graded on the curve. Obvious candidates are:

- math

- systems programming

- prototyping programming and toolkit use

- collaborating with people from other disciplines (biology, econ, archeology)

What do you like?

This is visceral. When you have to make a code faster is it fun or a pain? Do you like making 2D pictures? Do you like wiring novel hardware together?

Where is the topic in the field ecosystem?

How hot is the topic, and if hot will it be played out soon? If lots of people are already working on it you may be too late. But this is impossible to predict adequately. Something nobody is working on or, worse, something out of fashion is hard to get published, but find a minor venue or tech report and it will get rewarded, sometimes a lot, later.

How does it fit into your CV portfolio?

This is the most important point in this post. Look at your career goals and figure out what kind of CV you need. Like stocks and bonds in the economic world, each research project is a risk (or it wouldn't be research!) and you want a long term goal reached by a mix of projects. Also ask yourself how much investment is needed before you know whether a particular project has legs and will result in something good.

What is my advantage on this project?

If the project plays to your strengths then that is a good advantage (see "what I am good at" above). But access to unique data, unique collaborators, or unique equipment is a lot easier than being smarter than other people. Another advantage can be to just recognize something is a problem before other people do. This is not easy, but be on the lookout for rough spots in doing your work. In the 1980s most rendering researchers produced high dynamic range images, and none of us were too sure what to do with them, so we scaled. Tumblin and Rushmeier realized before anybody else that this wasn't an annoyance: it is a research opportunity. That work also is an example of how seminal work can be harder to publish but does get rewarded in the long run, and tech reporting it is a good idea so you get unambiguous credit for being first!

How hard is it?

Will it take three years full time (like developing a new OS or whatever) or is it a weekend project? If it's a loser, how long before I have a test that tells me it's a loser?

What is the time scale on this project?

Is the project something that will be used in industry tomorrow, in a year, 3 years, 10 years? There should be a mix of these in your portfolio as well, but figure out which appeals to you personally and lean in that direction.

Now let's put this into practice.

Suppose you have a research topic you are considering:

1. Does it help my career goals?

2. Am I good at it?

3. Will it increase my skills?

4. Will it be fun?

5. Is the topic pre-fashion, in-fashion, or stale?

6. What is my edge in this project?

7. Where is it in the risk/reward spectrum?

8. How long before I can abandon this project?

9. Is somebody paying me for this?

10. Is this project filling out a sparse part of my portfolio?

Suppose you DON'T have a project in mind and that is your problem. This is harder, but these tools can still help. I would start with #6. What is your edge? Then look for open problems. If you like games, look for what sucks in games and see if you bring anything to the table there. If your lab has a great VR headset, think about whether there is anything there. If you happen to know a lot about cars, see if there is something to do with cars. But always avoid multi-year implementation projects with no clear payoff unless the details of that further your career goals. For example, anyone who has written a full-on renderer will never lack for interesting job offers in the film industry. If you are really stuck, just embrace Moore's law and project resources out 20 years. Yes, it will offend most reviewers, but it will be interesting. Alternatively, go to a department that interests you, go to the smartest person there, and see if they have interesting projects they want CS help with.

Publishing the projects

No matter what you do, try to publish the project somewhere. And put it online. Tech report it early. If it isn't cite-able, it doesn't exist. And if you are bad at writing, start a blog or practice some other way. Do listen to your reviewers, but not as if it is gospel. Reviewing is a noisy measurement and you should view it as such. And if your work has long-term impact the tech report will get read and cited. If not, it will disappear as it should. The short-term hills and valleys will be annoying but keep in mind that reviewing is hard and unpaid and thus rarely done well-- it's not personal.

But WAIT. I want to do GREAT work.

Great work is sometimes done in grad school. But it's high-risk high-reward so it's not wise to do it unless you aren't too particular about career goals. The good news in CS is that most industry jobs are implicitly on the cutting edge so it's always a debate whether industry or academics is more "real" research (I think it's a tie but that's opinion). But be aware of the trade-off you are making. I won't say it can't be done (Eric Veach immediately comes to mind) but I think it's better to develop a diversified portfolio and have at most one high-risk high-reward project as a side project. In industrial research you can get away with such research (it's exactly why industrial research exists) and after tenure you can do it, but keep in mind your career lasts 40-50 years, so taking extreme chances in the first 5-10 is usually unwise.

Monday, November 17, 2014

Sunday, November 16, 2014

A fun tidbit from the original ray tracing paper

We're about 35 years after Whitted's classic ray tracing paper. It's available here. One thing that went by people at the time is that he proposed randomized glossy reflection in it.

Always be on the lookout for things current authors say are too slow!

Note that Rob Cook, always a careful scholar, credits Whitted for this idea in his classic 1984 paper.

Friday, November 14, 2014

NVIDIA enters cloud gaming market

NVIDIA has fired a big shot into the cloud gaming world. I'll watch this with great interest. If the technology is up to snuff this is the way I would like to play games: subscription model. One catch is that you need to buy an NVIDIA Shield (this looks to be around $200), so I think of this as a cloud-based console and it's not at all out of whack with console costs. The better news is I wouldn't have to pay $50 to try a game. I think the key factors will be:

- What games are available and what is the price to try them and/or play them?

- Is the tech good?

- Is my internet good enough?

Thursday, November 13, 2014

Converting Spectra to XYZ/RGB values

A renderer that uses a spectral representation must at some point convert to some image format. One can keep spectral curves in each pixel but most think that is overkill. Instead people usually convert to tristimulus values XYZ. These approximate the human response to spectra and there are a couple of versions of the standard with different viewing conditions. But all of the components of them are computed using some integrated weighting function:

Response = INTEGRAL weightingFunction * spectral radiance
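As a rough sketch, that integral can be evaluated numerically with a midpoint sum over wavelength. The weighting function used here, `toy_lobe`, is a made-up single Gaussian for illustration only. It is not the CIE data and not one of the published fits. The 380-780 nm range and 1 nm step are also assumptions.

```python
import math


def integrate_response(weighting, spectrum, lo_nm=380.0, hi_nm=780.0, step_nm=1.0):
    """Midpoint-rule estimate of INTEGRAL weighting(l) * spectrum(l) dl."""
    n = int(round((hi_nm - lo_nm) / step_nm))
    total = 0.0
    for i in range(n):
        lam = lo_nm + (i + 0.5) * step_nm  # center of the i-th wavelength bin
        total += weighting(lam) * spectrum(lam)
    return total * step_nm


def toy_lobe(lam_nm, center_nm=550.0, width_nm=40.0):
    """Hypothetical Gaussian weighting function (NOT a real color matching curve)."""
    t = (lam_nm - center_nm) / width_nm
    return math.exp(-0.5 * t * t)


def flat_spectrum(lam_nm):
    """Constant spectral radiance of 1 at every wavelength."""
    return 1.0


response = integrate_response(toy_lobe, flat_spectrum)
print(response)
```

To get X, Y, and Z you would call `integrate_response` three times with the three real weighting functions, whether they come from a table or an analytic fit.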

The weighting function is typically some big ugly table prone to typos. Here is the first small fraction of such a table from my old code.

I whined about this a lot to Chris Wyman, who found a very nice analytic approximation to the XYZ weighting functions that he published in this paper (the only paper I have ever been on where my principal contribution was complaining). His simplest approximation has an RMS error of about 1% (and the errors in the data itself, and in however almost all monitors are calibrated, are much worse than that, so I think the simple approximation is plenty):

However, his multilobe approximation is more accurate and makes for pretty nice code:

For any graphics application I would always use one of these approximations. If you need scotopic luminance for some night rendering you can either type in that formula or use this approximation from one of Greg Ward's papers:

I leave in that last paragraph from Greg's paper to emphasize we are lucky to now have sRGB as the de facto RGB standard. We do want to convert XYZ to RGB for display, and sRGB is the logical choice.
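For that last step, here is a minimal sketch of the XYZ to sRGB conversion using the commonly quoted four-digit matrix and the standard sRGB transfer curve. It assumes XYZ is scaled so that Y = 1 is display white (D65), and it simply clamps out-of-gamut values. The function names are illustrative.

```python
# Linear transform from CIE XYZ (D65 white, Y = 1) to linear sRGB.
XYZ_TO_LINEAR_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def encode_srgb(c):
    """Clamp a linear value to [0, 1] and apply the sRGB transfer curve."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def xyz_to_srgb(x, y, z):
    """Return display-encoded (R, G, B), each in [0, 1]."""
    return tuple(
        encode_srgb(row[0] * x + row[1] * y + row[2] * z)
        for row in XYZ_TO_LINEAR_SRGB
    )


if __name__ == "__main__":
    print(xyz_to_srgb(0.9505, 1.0, 1.0890))  # D65 white
```

Clamping is the crudest possible handling of out-of-range values. A real renderer would usually tone map first.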

Tuesday, November 11, 2014

Making an orthonormal basis from a unit vector

Almost all ray tracing programs have a routine that creates an orthonormal basis from a unit vector n. I am messing with my Swift ray tracer and just looked around for the "best known method". This routine is always annoyingly inelegant in my code so I was hoping for something better. Some googling yielded a lovely paper by Jeppe Revall Frisvad. He builds the basis with no normalization required:

It's not obvious, but those are orthonormal. The catch is the case where 1-nz = 0 so unfortunately an if is needed:

Still, even with the branch it is very nice. I was hoping that a careful use of IEEE floating point rules would allow the branch to be avoided but I don't see it. But perhaps some clever person will see a restructuring. The terms 0*0/0 should be a zero in principle and then all works out. The good news is that for n.z near 1.0 the stability looks good.
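For reference, here is a sketch of the construction as it appears in Frisvad's published formulation, written in Python rather than Swift. In this form the singular case is n.z = -1 (that is, 1 + n.z = 0), so the sign convention may differ from the formula pictured in the post. The threshold value is an assumption.

```python
import math


def orthonormal_basis(n):
    """Return two unit vectors (b1, b2) orthogonal to unit vector n and each other."""
    x, y, z = n
    if z < -0.9999999:
        # Singular case: n is (almost) exactly (0, 0, -1).
        return (0.0, -1.0, 0.0), (-1.0, 0.0, 0.0)
    a = 1.0 / (1.0 + z)
    b = -x * y * a
    b1 = (1.0 - x * x * a, b, -x)
    b2 = (b, 1.0 - y * y * a, -y)
    return b1, b2


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


if __name__ == "__main__":
    s = 1.0 / math.sqrt(3.0)
    for n in ((0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (s, s, s), (0.0, 0.0, -1.0)):
        b1, b2 = orthonormal_basis(n)
        print(n, b1, b2, dot(b1, b2), dot(b1, n), dot(b2, n))
```

No square root or normalization is needed in either branch. The only cost beyond the arithmetic is the single comparison.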

A more complex quartz composer example

Here is my first "real" quartz composer prototype. I duplicated the spirit of our kids' photo app 'dipityPix. This means 4 moving sprites that randomly adjust posterization thresholds when hit with a mouse.

First I use an interaction routine for each sprite that logs mouse events. These are only live if there is a link between the interaction routine and the sprite (which is just a connection you make with the mouse). The sprite has one of two images depending on whether the mouse is pressed. Again this is just making the appropriate links.

Second we need random numbers. The Random routine just spews a stream of random numbers and we only want one when the mouse is pressed for that ball. Thus we "sample and hold" it when the number of mouse clicks changes (very basic dataflow concept and surprisingly hard for me to get used to because state is more "in your face" in C-like languages).
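For readers more at home in imperative code, the sample-and-hold idea looks roughly like this in Python. This is only an analogy to the dataflow node, not Quartz Composer code. The class and method names are made up.

```python
import random


class SampleAndHold:
    """Pass the input through only when the trigger value changes."""

    def __init__(self, initial=0.0):
        self.value = initial
        self._last_trigger = None

    def update(self, input_value, trigger):
        # Latch a new value only on a change of the trigger (e.g. click count).
        if trigger != self._last_trigger:
            self.value = input_value
            self._last_trigger = trigger
        return self.value


if __name__ == "__main__":
    rng = random.Random(0)
    threshold = SampleAndHold()
    click_counts = [0, 0, 0, 1, 1, 2, 2, 2]  # one entry per frame
    for clicks in click_counts:
        # The random stream runs every frame, but the held value only
        # changes on frames where the click count changed.
        print(clicks, threshold.update(rng.random(), clicks))
```

The state that a C-like program would keep in an explicit variable lives inside the node, which is why the patch itself looks stateless.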

And that's it. I can see why people love the environment for prototyping.

For learning these basics the best resource I found was this excellent series of youtube videos by Rob Duarte. And here is the code for the example above.

Using Quartz Composer

I wanted to prototype some apps on a Mac and Alex Naaman suggested I give Quartz Composer a try and he helped me get started with Core Image shaders as well (thanks Alex!). My experience so far is this is a great environment. Facebook has embraced Quartz Composer and made an environment on top of it for mobile app prototyping called Origami.

Quartz Composer is a visual dataflow environment that is similar in spirit to AVS or SCIRun or Helix for you Mac old-timers. What is especially fun about it is that "hello world" is so easy. Here is a program to feed the webcam to a preview window with a Core Image shader posterizing it:

The "clear" there is layer 1 (like a Photoshop layer) and just gives a black background. The video input is the webcam. The Core Image filter is a GLSL variant. A cool thing is that if you add arguments to the filter, input ports are automatically added.

To program that filter you hit "inspect" on the filter and you can type stuff right in there.

The code for this example is here. You can run it on a Mac with QuickTime Player, but if you want to modify it you will need to install Xcode and the Quartz Composer environment.

Monday, November 10, 2014

Caustic from a distance

I spotted a strange bright spot in a parking lot and couldn't figure out where it was coming from. It is shown on the left below. When I squatted down, the source was clear, as shown on the right. This is a good example of a small measure path that can make rendering hard!
