"get ith point in square" or "get N points in square" function. The square is usually [0,1)^2. These always seem to add more ugliness to programs than I would expect, but we'll save that for another time. Additionally, it's really a multidimensional sampling (i.e., antialised motion-blur is 3D) a topic we will also defer.

There are five basic strategies for getting 2D samples on the unit square:

- Regular (lattice, sometimes rotated)

- Random

- Jittering (stratified sampling)

- QMC (quasi-Monte Carlo)

- Optimized
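As a point of comparison for the first two strategies, here is a minimal Python sketch. The function names `regular_samples` and `random_samples` are made up for this illustration, and placing the lattice points at cell centers is one assumption among several reasonable choices.

```python
import random

def regular_samples(sqrt_num_samples):
    """Return the cell centers of an S x S lattice on [0,1)^2."""
    s = sqrt_num_samples
    return [((i + 0.5) / s, (j + 0.5) / s)
            for j in range(s) for i in range(s)]

def random_samples(num_samples, rng=random):
    """Return independent uniform points on [0,1)^2."""
    # random.random() returns a float in [0.0, 1.0)
    return [(rng.random(), rng.random()) for _ in range(num_samples)]
```

Passing a seeded `random.Random(seed)` as `rng` makes the random set reproducible, which helps when comparing images.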

Jittering is usually done with a perfect-square number of samples because it uses an SxS grid that is "jittered," or perturbed. Pseudocode for jittering with S^2 samples (e.g., S = 4 gives 16 samples) is:

Vec2 get_sample(int s, int sqrt_num_samples)

float i = s % sqrt_num_samples   // column index of the grid cell

float j = s / sqrt_num_samples   // row index (integer division)

return Vec2((i+drand48())/ sqrt_num_samples, (j+drand48())/ sqrt_num_samples)
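A runnable version of the jittered sampler might look like the Python sketch below. It uses `random.random()` in place of `drand48()`, takes the column index `i` for the first coordinate and the row index `j` for the second, and adds a hypothetical helper `jittered_samples` that collects all S^2 points.

```python
import random

def get_sample(s, sqrt_num_samples, rng=random):
    """Return the s-th jittered sample on [0,1)^2 for an S x S grid."""
    i = s % sqrt_num_samples    # column index of the grid cell
    j = s // sqrt_num_samples   # row index (integer division)
    # Pick a uniformly random offset inside cell (i, j)
    return ((i + rng.random()) / sqrt_num_samples,
            (j + rng.random()) / sqrt_num_samples)

def jittered_samples(sqrt_num_samples, rng=random):
    """Return all S^2 jittered samples, one per grid cell."""
    n = sqrt_num_samples * sqrt_num_samples
    return [get_sample(s, sqrt_num_samples, rng) for s in range(n)]
```

Calling `jittered_samples(5)` gives the 25 points for one pixel, with exactly one point in each of the 25 cells.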

If we are going to sample a disk (like a lens), people typically sample a unit disk centered at (0,0). If we transform a sample (u,v) on [0,1)^2 to that disk, we can't do this:

Vec2 transform_to_disk(Vec2 on_square)

theta = 2*PI*on_square.x

r = on_square.y

return (r*cos(theta), r*sin(theta))

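This clumps samples near r=0, because the area inside radius r grows as r^2 while a uniform r puts the same number of samples in every ring of equal width. Instead we need r = sqrt(on_square.y), which compensates for the distortion.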
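The clumping is easy to check numerically. The Python sketch below compares the naive mapping with the square-root version by counting how many samples land within radius 0.5. That inner disk holds a quarter of the unit disk's area, so a uniform mapping should put roughly 25% of the samples there, while the naive one puts about half. The names `naive_to_disk` and `fraction_within` are made up for this illustration.

```python
import math
import random

def naive_to_disk(on_square):
    """Naive polar mapping with r = v; clumps samples near the center."""
    u, v = on_square
    theta = 2.0 * math.pi * u
    return (v * math.cos(theta), v * math.sin(theta))

def transform_to_disk(on_square):
    """Polar mapping with r = sqrt(v); uniform over the unit disk."""
    u, v = on_square
    theta = 2.0 * math.pi * u
    r = math.sqrt(v)
    return (r * math.cos(theta), r * math.sin(theta))

def fraction_within(mapping, radius, num_samples=20000, seed=1):
    """Estimate the fraction of mapped samples closer than radius to (0,0)."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(num_samples):
        x, y = mapping((rng.random(), rng.random()))
        if math.hypot(x, y) < radius:
            inside += 1
    return inside / num_samples
```

Comparing `fraction_within(naive_to_disk, 0.5)` with `fraction_within(transform_to_disk, 0.5)` shows the difference directly.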

However, there is a "concentric" mapping from the square to the disk that some say is better, and Dave Cline sent me (see previous blog post) a nicely compact piece of code for it, so I finally tried it.
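For readers who have not seen it, here is a Python sketch of one widely used formulation of the concentric square-to-disk map. It is not necessarily the code mentioned above, and the name `concentric_map` is an assumption for this illustration. The idea is to remap the square to [-1,1]^2, take the larger-magnitude coordinate as the radius, and use the ratio of the two coordinates to set the angle, so concentric squares map to concentric circles.

```python
import math

def concentric_map(on_square):
    """Map a point on [0,1)^2 to the unit disk, squares to circles."""
    u, v = on_square
    a = 2.0 * u - 1.0   # remap to [-1,1)
    b = 2.0 * v - 1.0
    if a == 0.0 and b == 0.0:
        return (0.0, 0.0)   # center of the square maps to the disk center
    if a * a > b * b:
        # Left and right wedges: radius follows a, angle follows b/a
        r = a
        phi = (math.pi / 4.0) * (b / a)
    else:
        # Top and bottom wedges: radius follows b, angle follows a/b
        r = b
        phi = (math.pi / 2.0) - (math.pi / 4.0) * (a / b)
    # A negative r reflects the point through the origin, covering the opposite wedge
    return (r * math.cos(phi), r * math.sin(phi))
```

Like the square-root mapping, this one preserves area, so it can be dropped in wherever `transform_to_disk` was used with the same (u,v) samples.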

Here is an image with random sampling and 25 samples per pixel.

|

| Random sampling 25 samples per pixel |

|

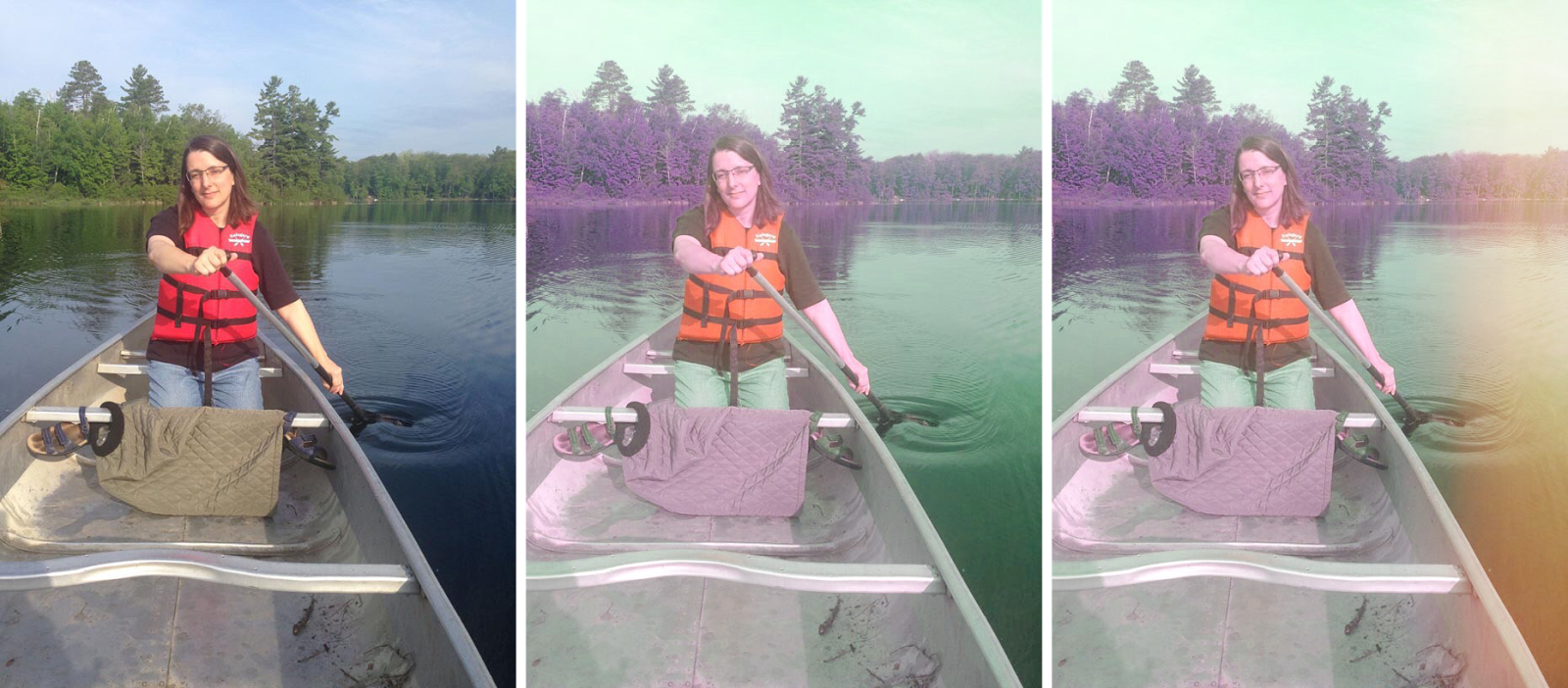

| 25 samples per pixel, random, jittered, and jittered with concentric map. |

|

| 49 samples per pixel, random, jittered, and jittered with concentric map. |

|

| 100 samples per pixel, random, jittered, and jittered with concentric map. |