Apple recently added photo app extensions to iOS, and we have added this feature to all our -Pic apps. What excites me most is that you can easily chain a series of apps, grabbing the best feature of each, into a fluid editing pipeline. To experiment with these emerging pipelines, I have grabbed all the photo apps supporting extensions that I could find. Some of them don't work for me, and I don't list those here (the stability of app extensions is improving, so I will add them as they or Apple fix the issues). The rest are listed below, most of them with my test of one feature from each, used along with one of our apps.

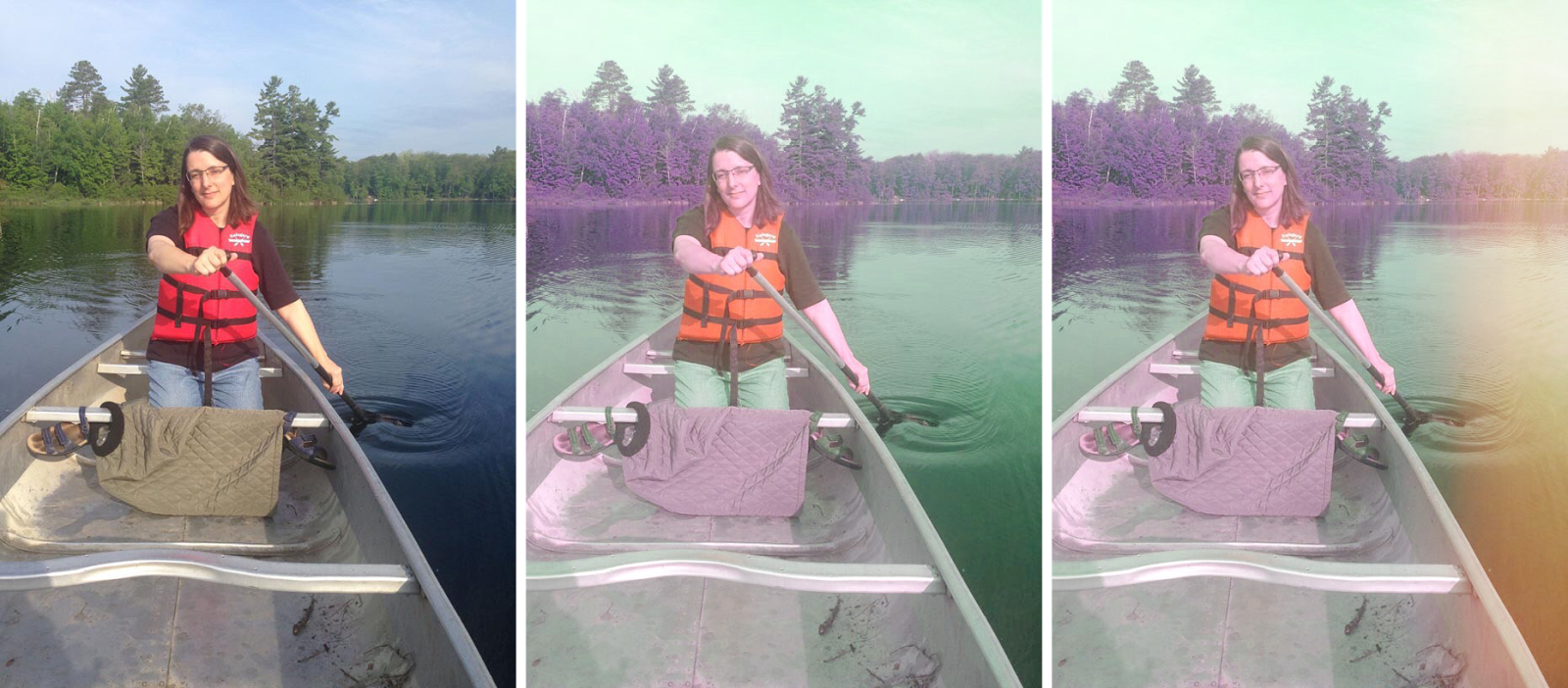

Afterlight is a popular general-purpose app. One of its most distinctive features is a big set of light leak effects. Here it is being used with PicJr (a kids' app with some over-the-top photo transforms) to make a very damaged old photo.

Left to right: original image, faded and distorted by PicJr, light leak using Afterlight.

Camera Plus is a powerful general-purpose app, and I especially like its frames and lab features.

ChowPic, by my company Purity, LLC, improves food photos.

Effects Studio has several neat specialized features, and I am especially fond of its sharpen feature in conjunction with our StencilPic app.

Etchings is an NPR (non-photorealistic rendering) app that does engraving-like effects, mostly in bitone but some with color. I am very impressed with its stroke generation! I've enjoyed using it in series with our own StencilPic.

Filters+ has some unusual manipulations I don't see in any other apps, such as "make a greyscale image from the max RGB component". I have found these useful for getting good stencils from images that are otherwise problematic, such as the one below, where popping out the max component helps separate out the more saturated foreground. Another cool thing about Filters+ is that it only runs as an app extension; the app itself just opens a user guide.

Original image processed to get max RGB component in Filters+ and then sent to StencilPic.
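To make the "max RGB component" idea concrete, here is a minimal sketch in plain Python. It is not how Filters+ is implemented; the function names and the tiny test image are assumptions made up for illustration. It compares a simple average-based greyscale with the max-component version on a few pixels.

```python
def mean_greyscale(pixels):
    """Grey value = integer average of the three components."""
    return [[(r + g + b) // 3 for (r, g, b) in row] for row in pixels]


def max_rgb_greyscale(pixels):
    """Grey value = the largest of the three components."""
    return [[max(r, g, b) for (r, g, b) in row] for row in pixels]


# Hypothetical 2x2 image: saturated red, dull grey, saturated blue, near black.
image = [
    [(200, 30, 20), (90, 90, 90)],
    [(10, 40, 220), (5, 5, 5)],
]

mean_grey = mean_greyscale(image)
max_grey = max_rgb_greyscale(image)

print(mean_grey)
print(max_grey)
```

With the average, the saturated red and blue pixels land at about the same grey level as the dull grey pixel, so a stencil threshold cannot tell them apart. With the max component, the saturated pixels come out much brighter than the dull one, which is the separation that helps the stencil.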

Flare Effects has many vintage and real camera filters. I like to use the old CCTV filter to get scanlines and then stencil them for some cool aliasing color effects.

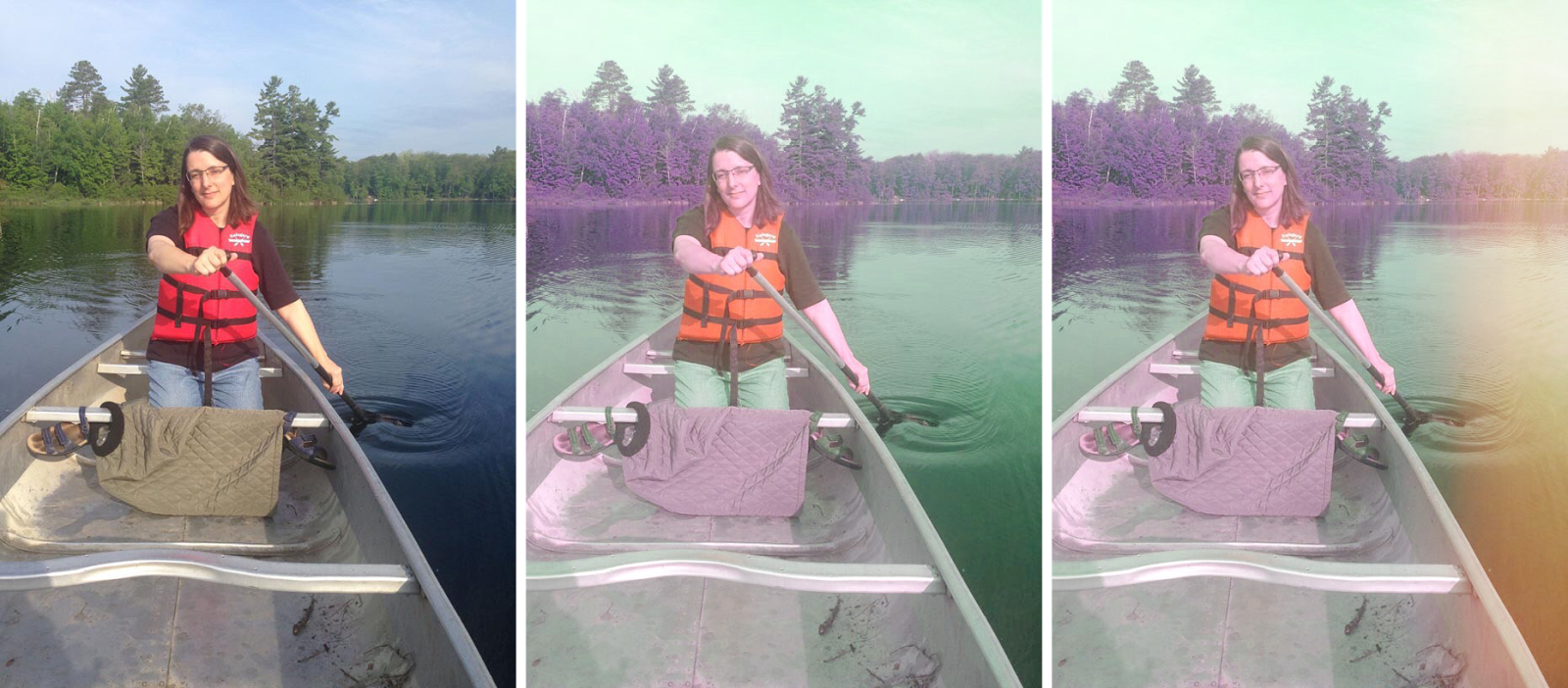

Fotograph has a number of thumbnail and slider-based adjustments. My favorites are its color temperature and saturation sliders, which stay very stable even in extreme territory.

Original photo, cool temperature, and large saturation in the Fotograph app.

Fragment has many kaleidoscope-inspired effects. Of course I like to stencil them.

LandscapePic is our own app that, like all of those here, also runs as an app extension. Because it doesn't have to preserve skin tones, it lets you turn everything up to 11 (but you don't have to).

MePic is designed to change skintone and contrast in selfies.

Original and three variations in MePic. Original photo courtesy of Parker Knight at Flickr.

Paper Camera is an NPR app with a clever interface of three sliders and a set of preset positions for them. It's of course good for a stencil pre-process too.

Photo Wizard has a variety of filters and effects and also has stickers and drawing functionality.

ProCam is a full-featured app with some very nice UI. The tilt-shift has an especially nice pinch interface.

Quick applies text on images with a nice simple interface.

Rookie has many filters including some that add spatial effects such as the rain below.

SepiaPic selects from four basic palettes and can combine the original image and the sepiatone.
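As a rough illustration of combining an original image with a sepia tone, a per-pixel linear blend is one common approach. The sketch below is not taken from SepiaPic: the tint color, the Rec. 601 luma weights, and the function names are all assumptions chosen for the example.

```python
def sepia_pixel(pixel, tint=(255, 240, 192)):
    """Tint the pixel's luma with a warm color (hypothetical sepia palette)."""
    r, g, b = pixel
    luma = 0.299 * r + 0.587 * g + 0.114 * b  # Rec. 601 luma weights
    return tuple(luma * t / 255 for t in tint)


def blend_with_sepia(pixel, amount):
    """Linear mix: amount=0 keeps the original, amount=1 is fully sepia."""
    sepia = sepia_pixel(pixel)
    return tuple(
        round((1 - amount) * o + amount * s) for o, s in zip(pixel, sepia)
    )


original = (100, 150, 200)
untouched = blend_with_sepia(original, 0.0)
full_sepia = blend_with_sepia(original, 1.0)

print(untouched, full_sepia)
```

Intermediate values of `amount` keep some of the original color while warming the overall tone.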

SimplePic is our general purpose simple photo editor. You have three screens to adjust contrast, color temperature, and saturation.

TattooPic is tailored to making tattoos look better. This is the sort of special-purpose app running as an extension that we expect to become more common.

Those are all the photo apps with extensions (that don't crash) that I could find. Let me know if I am missing any!